How not to run an ultramarathon

Aging makes running harder: recovery slows, endurance wanes, and managing weight is tougher. Stress from increased work responsibilities compounds these challenges. My last job pushed my stress levels high, prompting me to seek relief through distance running—a hardship chosen and controlled, providing a welcome contrast to life’s uncontrollable demands.

In that spirit, I did a lot of running and improved a lot, but I ran a pretty bad 50K at the Cowtown Ultra (a bad run is one where I walk). The fun of messing up is that I get to use my mind, not just my body, to figure out what went wrong and how to fix it. First of all, I totally value coaching and don’t think it’s a great idea to always be my own coach. Something that pushes me to my limits, like a full Ironman, really calls for professional coaching, and more direct coaching would have helped here. That said, I like to be smart about this stuff and I hope my notes and lessons learned are helpful. I certainly learned a lot.

I should know what I’m doing, how to train and how to run. I have run 10 marathons, with a best of around 3 hours, and I trained with the Cowtown Trailblazers. I did every long run and most of the speed work. So what happened?

Two things: training volume and nutrition.

First, I trained very well for a marathon. I felt fine at 25 miles. I did long runs, speed work and marathon nutrition. I didn’t do the ultra things. I didn’t get enough slow long runs in. I didn’t run back-to-back long runs. I didn’t get my weekly volume high enough. I needed to run a lot of slow 10-mile runs on what otherwise were rest days. On top of that, I didn’t taper; I simply stopped training two weeks before the race. That was when I changed jobs and cities, snowstorms took out the running trails in DC, and I wasn’t used to long runs on treadmills. In short, an ultramarathon puts the body under stress for a prolonged period, beyond what my training simulated. While the long runs built a base, they may not have been long enough to prepare my muscles for continuous, fatigue-resistant performance.

Second, I’m just figuring out endurance nutrition. I’ve had excellent advice but have been too busy with the job transition to figure nutrition out. More on that below.

So, to understand how I came to this conclusion, let’s first look at my training volume.

Training

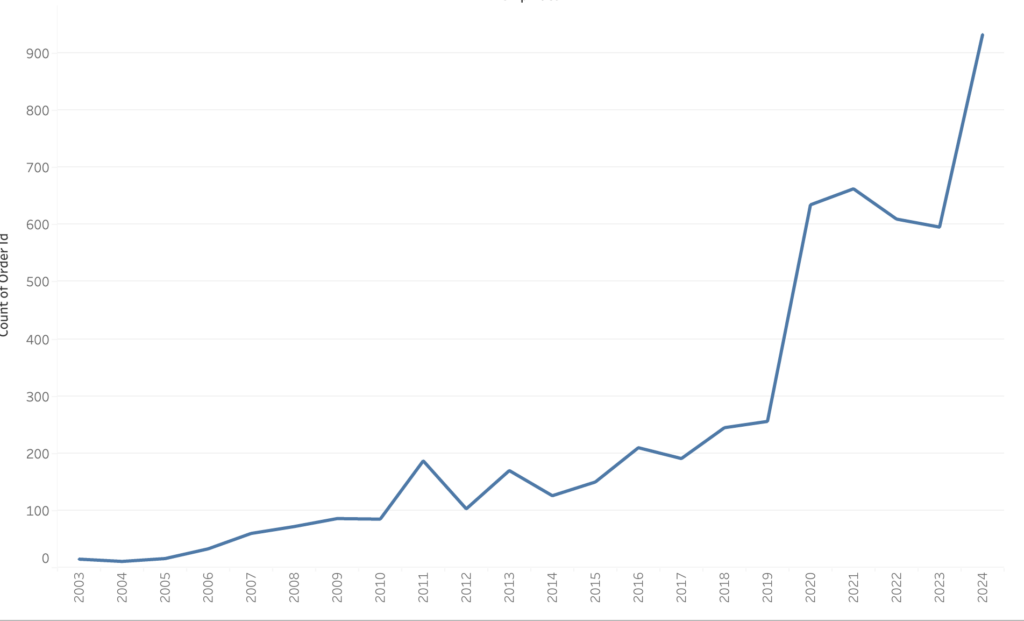

I felt pretty good about my training, but an analysis of my training log shows a handful of very long efforts (some in the 20–30 mile range) interspersed with long stretches of very short runs. While I did have consistent big runs (19, 20, 22, 27 and 31 miles), those were surrounded by lots of 2–5 mile outings. In principle, shorter runs can be useful for recovery or speed work, but ultra training typically thrives on consistent, moderate‐to‐long runs that gradually build up overall endurance and durability (see Fitzgerald, 80/20 Running or Daniels, Daniels’ Running Formula).

Net net, my training suffered from:

• Too many “small bites,” not enough consistent moderate mileage: Most experienced ultrarunners aim for a weekly volume where the “short days” are still 5–8 miles, the medium days are 10–15, and the big days push over 20. My log often jumps from 2‐ or 3‐mile runs straight to 19+ miles with little in between.

• Sporadic vs. systematic approach: Ideally I’d structure my training in blocks (periods of progressive mileage plus “cutback” weeks) rather than random “short‐short‐short‐BIG” patterns. This ensures adaptation and reduces the risk of overuse or meltdown. It’s true that I planned my long runs, but the other days were just whatever kinda fit in.

• My cold turkey training stop two weeks before the race: I had heard that I can’t get any faster in the last two weeks, so I let my busy life and new job stop me from working out for the two weeks before the race. Bad idea. This is called “detraining” and it kinda wastes a lot of hard work. Turns out I have to keep the engine running to prevent a measurable decline in VO₂ max, enzyme activity (especially those related to aerobic metabolism), neuromuscular coordination, and running economy (see Neufer, 1989; Coyle et al., 1984).

Research shows VO₂ max can start dropping after about 7–14 days of rest (Coyle et al., 1984; Mujika & Padilla, 2000). Also enzymes like citrate synthase (key for aerobic energy production) and PFK (glycolytic pathway) can decline with reduced training stimulus (Green et al., 1999). A moderate volume of continued running helps maintain plasma volume, crucial for thermoregulation and sustaining cardiac output (Convertino, 1991). If training load suddenly crashes, plasma volume can drop, which can compromise heat dissipation and endurance.

What can happen in two weeks? I found out it’s true that new major physiological adaptations (e.g., boosting mitochondrial density or capillarization) generally take longer than two weeks to develop. However, fine‐tuning muscle glycogen storage, reactivity to high‐intensity work, and freshening up are real gains that can happen within 1–2 weeks. Turns out that structured tapers are designed to optimize these short‐term changes: repletion of glycogen stores, healing of micro‐tears, and a slight boost to neuromuscular function (Mujika & Padilla, 2003).

For more on this see Fitzgerald, M. (2014). 80/20 Running. New York: Plume and Daniels, J. (2005). Daniels’ Running Formula (2nd ed.). Human Kinetics.

But did I mess up the pace?

I did my research here, but probably got this wrong. Based on my training times, I was going to run as slowly as possible while still feeling like I was running. From all my research, this should be around 8:30 per mile. I consulted everything from Jack Daniels’ VDOT tables to the McMillan Running Calculator, but I mostly used the Peter Riegel formula, which estimates the time \(T_2\) for any new race distance \(D_2\), given a known time \(T_1\) for a distance \(D_1\):

$$T_2 \;=\; T_1 \times \left(\frac{D_2}{D_1}\right)^{1.06}$$

Where \(T_1\) is time (in minutes, hours, or seconds—just be consistent) for the known distance \(D_1\). \(T_2\) is your predicted time for the new distance \(D_2\) and 1.06 is the exponent Riegel derived by fitting race performances from a wide range of distances (from the mile up to the marathon).
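To make the formula concrete, here is a minimal Python sketch of the calculation. The function names and the 3:30 marathon input are placeholders I made up for illustration, not my actual numbers, and the prediction is only as good as the assumption that the 1.06 exponent still holds at 50K.

```python
MARATHON_KM = 42.195
FIFTY_K_KM = 50.0
KM_PER_MILE = 1.609344


def riegel_predict(t1_minutes: float, d1: float, d2: float, exponent: float = 1.06) -> float:
    """Predict the time for distance d2 from a known time t1 over distance d1.

    d1 and d2 must use the same unit; the result is in the unit of t1.
    """
    if t1_minutes <= 0 or d1 <= 0 or d2 <= 0:
        raise ValueError("time and distances must be positive")
    return t1_minutes * (d2 / d1) ** exponent


def format_pace(minutes_per_mile: float) -> str:
    """Format a decimal pace as M:SS, rounding to whole seconds first."""
    total_seconds = round(minutes_per_mile * 60)
    return f"{total_seconds // 60}:{total_seconds % 60:02d}"


# Hypothetical input: a 3:30 marathon (210 minutes).
predicted_minutes = riegel_predict(210.0, MARATHON_KM, FIFTY_K_KM)
predicted_pace = predicted_minutes / (FIFTY_K_KM / KM_PER_MILE)
print(f"Predicted 50K: {predicted_minutes:.0f} min, about {format_pace(predicted_pace)} per mile")
```

Because the exponent is greater than 1, the predicted pace always gets a little slower as the distance grows, which is the whole point of the formula.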

This worked. For the first 25 miles my pace was consistently around 8:10–8:20 per mile and I felt great. The hills didn’t slow me down at all. I told myself, just run as slow as possible. But at mile 25 my pace dropped sharply (10:49, then 12:15, 13:39, and so on). So did I hit the wall? No. Even though my splits became much slower, my heart rate data remained relatively controlled (see below).

So I’m a wimp? Probably not. I have a pretty high pain tolerance and am used to pushing through the pain cave at mile 23 in marathons. In the later miles of this race (25+), I just couldn’t run. My legs wouldn’t do it, and maybe I was a bit of a wimp too. My mile splits (8:12–8:30 early on, drifting into 10+ minutes near the end) suggest that what feels comfortable in the early miles of an ultra is often still too fast. Pacing strategies for road marathons sometimes carry over to ultras, but they can backfire over 50K, 50M, or 100K distances. Without enough easy pacing experience in runs of 20+ miles, it’s possible to gradually tear up muscle tissue and never quite realize it until the high miles.

This is what happened. My heart rate did not spike or drift abnormally high at the point I started slowing dramatically, which leads me to believe it wasn’t classic glycogen depletion (“hitting the wall”). When runners truly bonk from carbohydrate depletion, pace typically plummets while heart rate often stays elevated (as the body struggles to compensate) or drifts sharply upward at the same pace. On the other hand, I ended up forced to run/walk, and my heart rate often dropped or stayed steady instead of skyrocketing. Yes, I should have run slower.
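One way to put numbers on that comparison is to average pace and heart rate on either side of the slowdown. This is only a rough sketch: the function name and data layout are my own, the paces echo the splits quoted above, and the heart rates are invented for illustration. It quantifies the change; it does not diagnose anything.

```python
from statistics import mean


def compare_before_after(splits, slowdown_mile):
    """Compare average pace and heart rate before and from slowdown_mile onward.

    splits: list of (mile_number, pace_seconds_per_mile, avg_heart_rate).
    Returns (pace_change_seconds, heart_rate_change_bpm); positive pace
    change means slower, positive heart-rate change means higher.
    """
    before = [s for s in splits if s[0] < slowdown_mile]
    after = [s for s in splits if s[0] >= slowdown_mile]
    if not before or not after:
        raise ValueError("need splits on both sides of slowdown_mile")
    pace_change = mean(p for _, p, _ in after) - mean(p for _, p, _ in before)
    hr_change = mean(h for _, _, h in after) - mean(h for _, _, h in before)
    return pace_change, hr_change


# Illustrative splits: paces match the ones quoted in the text,
# heart rates are made up.
example_splits = [
    (23, 495, 150),
    (24, 500, 151),
    (25, 649, 148),
    (26, 735, 142),
    (27, 819, 140),
]
pace_change, hr_change = compare_before_after(example_splits, slowdown_mile=25)
print(f"Pace slowed by {pace_change:.0f} s/mile; heart rate changed by {hr_change:+.1f} bpm")
```

A big positive pace change paired with a flat or negative heart-rate change is the pattern described above: the legs gave out before the engine did.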

Muscle damage and micro‐trauma accumulate in ultras—especially without large volumes of mileage or back‐to‐back long runs. Eccentric loading (especially on downhills) can shred muscle fibers and cause pace to crash even if well fueled.

In the future, I should adopt a pace around 60–90 seconds per mile slower than my “easy run” pace for standard marathons, especially in the first half of an ultra. According to research on pacing in ultras (Lambert & Hoffman, 2010), disciplined starts help avoid crippling muscle damage in the second half. (See the Average Ultra Marathon Pace Guide)
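As a quick sanity check on what that rule means in practice, here is a small sketch that turns an easy pace into a target range for the opening miles. The 8:30 easy pace and the function names are placeholders for illustration.

```python
def pace_str(seconds_per_mile: int) -> str:
    """Format whole seconds per mile as M:SS."""
    return f"{seconds_per_mile // 60}:{seconds_per_mile % 60:02d}"


def ultra_start_pace(easy_pace_seconds: int, slower_by=(60, 90)):
    """Return (fast_end, slow_end) of the target range in seconds per mile.

    slower_by is the 60-90 second adjustment from the text.
    """
    return easy_pace_seconds + slower_by[0], easy_pace_seconds + slower_by[1]


# Hypothetical easy pace of 8:30 per mile.
easy = 8 * 60 + 30
fast_end, slow_end = ultra_start_pace(easy)
print(f"Target early pace: {pace_str(fast_end)} to {pace_str(slow_end)} per mile")
```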

Nutrition

This is an area where I should have done much better. I underestimated the calories burned. I learned the hard way that my “nutrition plan” for this ultra—just Gatorade, two gels, a small muffin, and a couple bananas—was totally inadequate. For an all‐day event like this, I needed a steady stream of carbs, something like 30–60 grams per hour (or more) to keep the tank from running on fumes (see Jeukendrup, 2014). Instead, I basically banked on a sugary sports drink, a couple gels, and some solid foods that were high in fiber and not super friendly to my stomach mid‐race. So, while I thought I was covered, the reality is that I probably didn’t get nearly enough easy‐to‐absorb carbs, and my gut was stuck trying to digest bananas and a muffin when it really needed quick fuel. It’s no wonder my energy levels tanked the moment things got tough. Fortunately, this one is easy to fix.
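To see how quickly the numbers add up, here is a minimal sketch of the hourly target turned into a race total. The 5.5-hour duration and the 120 g consumed are made-up inputs, not a reconstruction of my race, and the function names are my own.

```python
def carb_target_grams(race_hours: float, grams_per_hour=(30.0, 60.0)):
    """Return the (low, high) total carbohydrate target in grams for a race."""
    if race_hours <= 0:
        raise ValueError("race_hours must be positive")
    low, high = grams_per_hour
    return race_hours * low, race_hours * high


def carb_shortfall(consumed_grams: float, race_hours: float, grams_per_hour=(30.0, 60.0)) -> float:
    """Grams missing relative to the low end of the target (0 if the low end was met)."""
    low_target, _ = carb_target_grams(race_hours, grams_per_hour)
    return max(0.0, low_target - consumed_grams)


# Hypothetical race: 5.5 hours on course, 120 g of carbs actually eaten.
low, high = carb_target_grams(5.5)
missing = carb_shortfall(120.0, 5.5)
print(f"Target: {low:.0f} to {high:.0f} g; short of the low end by {missing:.0f} g")
```

To use it for real, I would add up the carbohydrate grams printed on the labels of everything I actually ate and drank and pass that total in as `consumed_grams`.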

In the future

Altogether, ultra performance is about aerobic capacity (VO₂ max and thresholds), muscular endurance (high running economy, resistance to muscle damage) and thermoregulatory efficiency (plasma volume, sweat rate).

It’s clear that ultra finishing success often depends less on a few single monster runs and more on the steady layering of mileage each and every week. Most good ultra plans build around one long run each weekend, but they also sprinkle in 8–12 milers midweek to build durability and be ok with a slower pace. Strava doesn’t matter, the hours do. Also, a key hallmark of ultra training is doing back‐to‐back long runs (e.g., Saturday 20 miles + Sunday 12 miles) to simulate running on tired legs. Without this, I miss a prime chance to build up “muscle resilience,” so those final miles of the race can end up being forced run/walk due to sore, damaged muscles.
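A training log makes it easy to check whether those back‐to‐back weekends actually happened. The sketch below scans a log for consecutive days that both count as long. The 18 and 10 mile thresholds are my own assumption, loosely based on the 20 + 12 example above, and the log entries are made up.

```python
from datetime import date, timedelta


def back_to_back_long_runs(runs, first_min=18.0, second_min=10.0):
    """Find consecutive-day pairs where both runs meet the mileage thresholds.

    runs: dict mapping a date to the miles run that day.
    Returns a list of (first_day, second_day) tuples in date order.
    """
    pairs = []
    for day in sorted(runs):
        next_day = day + timedelta(days=1)
        if runs[day] >= first_min and runs.get(next_day, 0.0) >= second_min:
            pairs.append((day, next_day))
    return pairs


# Made-up log: one proper back-to-back weekend, one big run followed by a shakeout.
log = {
    date(2025, 1, 4): 20.0,
    date(2025, 1, 5): 12.0,
    date(2025, 1, 8): 3.0,
    date(2025, 1, 11): 22.0,
    date(2025, 1, 12): 2.0,
}
pairs = back_to_back_long_runs(log)
for first_day, second_day in pairs:
    print(f"{first_day}: {log[first_day]} mi, then {second_day}: {log[second_day]} mi")
```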

References

- Knechtle, B. et al. (2012). A systematic review of ultramarathon performance. Open Access Journal of Sports Medicine, 3, 55–67.

- Stellingwerff, T. (2016). Case Study: Nutrition and training periodization in three elite marathon runners. Int J Sport Nutr Exerc Metab, 26(1), 31–41.

- Fitzgerald, M. (2014). 80/20 Running. New York: Plume.

- Daniels, J. (2005). Daniels’ Running Formula (2nd ed.). Human Kinetics.

- Bosquet, L., Montpetit, J., Arvisais, D., & Mujika, I. (2007). Effects of tapering on performance: A meta‐analysis. Medicine & Science in Sports & Exercise, 39(8), 1358–1365.

- Convertino, V. A. (1991). Blood volume: Its adaptation to endurance training. Medicine & Science in Sports & Exercise, 23(12), 1338–1348.

- Coyle, E. F., Hemmert, M. K., & Coggan, A. R. (1984). Effects of detraining on cardiovascular responses to exercise: Role of blood volume. Journal of Applied Physiology, 57(6), 1857–1864.

- Green, H. et al. (1999). Adaptations in muscle metabolism to short‐term training are expressed early in submaximal exercise. Canadian Journal of Physiology and Pharmacology, 77(5), 440–444.

- Millet, G. Y. (2011). Can neuromuscular fatigue explain running strategies and performance in ultra‐marathons? Sports Medicine, 41(6), 489–506.

- Mujika, I., & Padilla, S. (2000). Detraining: Loss of training‐induced physiological and performance adaptations. Sports Medicine, 30(3), 145–154.

- Mujika, I., & Padilla, S. (2003). Scientific bases for precompetition tapering strategies. Sports Medicine, 33(13), 933–951.

- Neufer, P. D. (1989). The effect of detraining and reduced training on the physiological adaptations to aerobic exercise training. Sports Medicine, 8(5), 302–320.

- Saunders, P. U., Pyne, D. B., Telford, R. D., & Hawley, J. A. (2004). Factors affecting running economy in trained distance runners. Sports Medicine, 34(7), 465–485.

Code

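SQL is powerful: I was able to pull a good dataset from Strava that was useful for my analysis. (I can share my code if folks care to get data from Strava via Python. Leave a comment below and I’ll package up and share the code.) The query below bins one workout into 100-unit distance segments and reports the pace and average heart rate for each.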

    -- Step 1: per-point deltas for one workout.
    -- Distance is assumed to be in meters (given the 1609.34 divisor below).
    WITH distance_intervals AS (
        SELECT
            workout_id,
            timestamp,
            distance,
            heartrate,
            distance - LAG(distance, 1, 0)
                OVER (PARTITION BY workout_id ORDER BY timestamp) AS segment_distance,
            EXTRACT(EPOCH FROM (timestamp - LAG(timestamp, 1, timestamp)
                OVER (PARTITION BY workout_id ORDER BY timestamp))) AS segment_time
        FROM workout_data_points
        WHERE workout_id = 13708259837
    ),
    -- Step 2: group the points into 100-unit distance bins.
    binned_intervals AS (
        SELECT
            workout_id,
            FLOOR(distance / 100) * 100 AS distance_bin,
            SUM(segment_time) AS total_time_seconds,
            SUM(segment_distance) / 1609.34 AS distance_miles,
            AVG(heartrate) AS avg_heartrate
        FROM distance_intervals
        WHERE segment_distance IS NOT NULL
        GROUP BY workout_id, distance_bin
    ),
    -- Step 3: pace per bin, rounded to whole seconds per mile.
    -- Rounding before splitting into minutes and seconds avoids output like "8:60".
    pace_calculated AS (
        SELECT
            distance_bin,
            total_time_seconds / 60.0 AS total_time_minutes,
            CASE
                WHEN distance_miles > 0
                THEN ROUND(total_time_seconds / distance_miles)::BIGINT
                ELSE NULL
            END AS pace_sec_per_mile,
            avg_heartrate
        FROM binned_intervals
    )
    -- Step 4: format the pace as M:SS.
    SELECT
        distance_bin,
        CASE
            WHEN pace_sec_per_mile IS NOT NULL
            THEN (pace_sec_per_mile / 60)::TEXT || ':' ||
                 LPAD((pace_sec_per_mile % 60)::TEXT, 2, '0')
            ELSE 'N/A'
        END AS avg_pace_min_per_mile,
        avg_heartrate
    FROM pace_calculated
    ORDER BY distance_bin;