The Connectome Scaling Wall: What Mapping Fly Brains Reveals About Autonomous Systems

In October 2024, the FlyWire consortium published the complete connectome of an adult Drosophila melanogaster brain: 139,255 neurons, 54.5 million synapses, 8,453 cell types, mapped at 8-nanometer resolution. This is only the third complete animal connectome ever produced, following C. elegans (302 neurons, 1986) and Drosophila larva (3,016 neurons, 2023). The 38-year gap between the first and third isn’t just a story about technology—it’s about fundamental scaling constraints that apply equally to biological neural networks and autonomous robotics.

A connectome is the complete wiring diagram of a nervous system. Think of it as the ultimate circuit board schematic, except instead of copper traces and resistors, you’re mapping biological neurons and the synapses that connect them. But unlike an electrical circuit you can trace with your finger, these connections exist in three-dimensional space at scales invisible to the naked eye. A single neuron might be a tenth of a millimeter long but only a few microns wide—about 1/20th the width of a human hair. The synapses where neurons connect are even tinier: just 20-40 nanometers across, roughly 2,000 times smaller than the width of a hair. And there are millions of them, tangled together in a three-dimensional mesh that makes the densest urban skyline look spacious by comparison. The connectome doesn’t just tell you which neurons exist—it tells you how they talk to each other. Neuron A connects to neurons B, C, and D. Neuron B connects back to A and forward to E. That recurrent loop between A and B? That might be how the fly remembers the smell of rotting fruit. The connection strength between neurons—whether a synapse is strong or weak—determines whether a signal gets amplified or filtered out. It’s the difference between a whisper and a shout, between a fleeting thought and a committed decision.

Creating a connectome is almost absurdly difficult. First, you preserve the brain perfectly at nanometer resolution, then slice it into thousands of impossibly thin sections. The fruit fly required 7,000 slices, each just 40 nanometers thick, about 1/1,000th the thickness of paper, cut with diamond-knife precision. Each slice goes under an electron microscope, generating a 100-teravoxel dataset where each voxel represents an 8-nanometer cube of brain tissue. Then comes the nightmare part: tracing each neuron through all 7,000 slices, like following a single thread through 7,000 sheets of paper where the thread appears as just a dot on each sheet, with 139,255 threads tangled together. When Sydney Brenner’s team mapped C. elegans in the 1970s and 80s, they did this entirely by hand, printing electron microscopy images on glossy paper and tracing neurons with colored pens through thousands of images. It took 15 years for 302 neurons. The fruit fly has 460 times more.

This is where the breakthrough happened. The FlyWire consortium used machine learning algorithms called flood-filling networks to automatically segment neurons, but the AI made mistakes constantly—merging neurons, splitting single cells into pieces, missing connections. So they crowdsourced the proofreading: hundreds of scientists and citizen scientists corrected errors neuron by neuron, synapse by synapse, and the AI learned from each correction. This hybrid approach—silicon intelligence for speed, human intelligence for accuracy—took approximately 50 person-years of work. The team then used additional machine learning to predict neurotransmitter types from images alone and identified 8,453 distinct cell types. The final result: 139,255 neurons, 54.5 million synapses, every single connection mapped—a complete wiring diagram of how a fly thinks.

The O(n²) Problem

Connectomes scale brutally. Going from 302 to 139,255 neurons isn’t just 460× harder: the number of possible neuron pairs grows as n², so the space of candidate connections grows more than 200,000×. The realized wiring is sparser than that upper bound but still clearly superlinear. The worm has ~7,000 synapses. The fly has 54.5 million, a 7,800× increase in edges for a 460× increase in nodes. The fly brain also went from 118 cell classes to 8,453 distinct types, meaning the segmentation problem became orders of magnitude more complex.
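The neuron and synapse counts quoted above are enough for a quick sanity check of how edges have grown relative to nodes. The sketch below is only arithmetic on those four numbers; the "two-point exponent" is an illustrative quantity fitted through just two organisms, not a measured scaling law.

```python
import math

# Figures quoted in the text above.
WORM_NEURONS, WORM_SYNAPSES = 302, 7_000
FLY_NEURONS, FLY_SYNAPSES = 139_255, 54_500_000


def scaling_summary(n1, e1, n2, e2):
    """Return node ratio, edge ratio, pair-count ratio, and k in edges ~ n**k."""
    node_ratio = n2 / n1
    edge_ratio = e2 / e1
    # Unordered neuron pairs n*(n-1)/2: the O(n^2) upper bound on connections.
    pair_ratio = (n2 * (n2 - 1)) / (n1 * (n1 - 1))
    # Exponent of a power law drawn through the two data points.
    exponent = math.log(edge_ratio) / math.log(node_ratio)
    return node_ratio, edge_ratio, pair_ratio, exponent


node_ratio, edge_ratio, pair_ratio, exponent = scaling_summary(
    WORM_NEURONS, WORM_SYNAPSES, FLY_NEURONS, FLY_SYNAPSES
)
print(f"nodes x{node_ratio:.0f}, edges x{edge_ratio:.0f}, possible pairs x{pair_ratio:.0f}")
print(f"two-point exponent: edges ~ n^{exponent:.2f}")
```

The exponent lands between 1 and 2: synapse count grows faster than neuron count, but well below the all-pairs bound.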

Sydney Brenner’s team in 1986 traced C. elegans by hand through 8,000 electron microscopy images using colored pens on glossy prints. They tried automating it with a Modular I computer (64 KB memory), but 1980s hardware couldn’t handle the reconstruction. The entire project took 15 years.

The FlyWire consortium solved the scaling problem with a three-stage pipeline:

Stage 1: Automated segmentation via flood-filling networks

They sliced the fly brain into 7,000 sections at 40nm thickness (1/1000th the thickness of paper), generating a 100-teravoxel dataset. Flood-filling neural networks performed initial segmentation—essentially a 3D region-growing algorithm that propagates labels across voxels with similar appearance. This is computationally tractable because it’s local and parallelizable, but error-prone because neurons can look similar to surrounding tissue at boundaries.
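To make the "3D region-growing" description concrete, here is a minimal sketch of plain intensity-based region growing on a toy volume. It is not a flood-filling network: the real method uses a learned model to decide which voxels to add, whereas this version uses a fixed intensity tolerance. The function name, the tolerance parameter, and the toy data are all assumptions for illustration.

```python
from collections import deque

# The six face-adjacent neighbours of a voxel.
NEIGHBOURS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))


def region_grow(volume, seed, tolerance):
    """Return the set of voxels 6-connected to `seed` whose intensity is
    within `tolerance` of the seed voxel's intensity."""
    depth, height, width = len(volume), len(volume[0]), len(volume[0][0])
    sz, sy, sx = seed
    seed_value = volume[sz][sy][sx]
    labeled = {seed}
    frontier = deque([seed])
    while frontier:
        z, y, x = frontier.popleft()
        for dz, dy, dx in NEIGHBOURS:
            nz, ny, nx = z + dz, y + dy, x + dx
            if not (0 <= nz < depth and 0 <= ny < height and 0 <= nx < width):
                continue
            if (nz, ny, nx) in labeled:
                continue
            if abs(volume[nz][ny][nx] - seed_value) <= tolerance:
                labeled.add((nz, ny, nx))
                frontier.append((nz, ny, nx))
    return labeled


# Toy 3-slice volume: a bright "neurite" (9) through a dark background (1),
# with one ambiguous boundary voxel (7) touching it in the middle slice.
volume = [
    [[1, 1, 1], [1, 9, 1], [1, 1, 1]],
    [[1, 1, 1], [1, 9, 7], [1, 1, 1]],
    [[1, 1, 1], [1, 9, 1], [1, 1, 1]],
]
strict = region_grow(volume, seed=(0, 1, 1), tolerance=1)
loose = region_grow(volume, seed=(0, 1, 1), tolerance=3)
print(len(strict), len(loose))  # 3 voxels vs. 4 voxels
```

The ambiguous voxel is the toy version of a merge error: with a loose tolerance the label leaks across a weak boundary, and with a strict one it risks splitting a cell where staining is uneven.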

Stage 2: Crowdsourced proofreading

The AI merged adjacent neurons, split single cells, and missed synapses constantly. Hundreds of neuroscientists and citizen scientists corrected these errors through a web interface. Each correction fed back into the training set, iteratively improving the model. This hybrid approach—automated first-pass, human verification, iterative refinement—consumed approximately 50 person-years.

Stage 3: Machine learning for neurotransmitter prediction

Rather than requiring chemical labeling for every neuron, they trained classifiers to predict neurotransmitter type (glutamate, GABA, acetylcholine, etc.) directly from EM morphology. This is non-trivial because neurotransmitter identity isn’t always apparent from structure alone, but statistical patterns in vesicle density, synapse morphology, and connectivity motifs provide signal.

The result is complete: every neuron traced from dendrite to axon terminal, every synapse identified and typed, every connection mapped. UC Berkeley researchers ran the entire connectome as a leaky integrate-and-fire network on a laptop, successfully predicting sensorimotor pathways for sugar sensing, water detection, and proboscis extension. Completeness matters because partial connectomes can’t capture whole-brain dynamics—recurrent loops, feedback pathways, and emergent computation require the full graph.
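For readers unfamiliar with the model class, a leaky integrate-and-fire (LIF) network can be sketched in a few lines. This is a toy under stated assumptions, not the published whole-brain model: the three-neuron sensory-to-motor chain, the weights, the time constant, and the threshold are all invented for illustration.

```python
def simulate_lif(weights, external_input, steps, dt=1.0, tau=10.0, threshold=1.0):
    """Simulate a leaky integrate-and-fire network.

    weights[i] is a dict {j: w}: neuron i pushes neuron j's voltage by w
    one step after i spikes. external_input[i] is a constant drive per step.
    Returns the spike count of each neuron.
    """
    n = len(weights)
    v = [0.0] * n
    spike_counts = [0] * n
    spiked = []
    for _ in range(steps):
        # Deliver spikes emitted on the previous step.
        synaptic = [0.0] * n
        for i in spiked:
            for j, w in weights[i].items():
                synaptic[j] += w
        spiked = []
        for i in range(n):
            # Leak toward rest (0), then integrate inputs.
            v[i] += dt * (-v[i] / tau) + external_input[i] + synaptic[i]
            if v[i] >= threshold:
                spiked.append(i)
                spike_counts[i] += 1
                v[i] = 0.0  # reset after a spike
    return spike_counts


# Hypothetical chain: sensory neuron 0 -> interneuron 1 -> motor neuron 2.
chain = [{1: 1.2}, {2: 1.2}, {}]
with_stimulus = simulate_lif(chain, external_input=[0.3, 0.0, 0.0], steps=100)
without_stimulus = simulate_lif(chain, external_input=[0.0, 0.0, 0.0], steps=100)
print(with_stimulus, without_stimulus)
```

With a real connectome, `weights` would come from the synapse table and the signs from the predicted neurotransmitter types. The per-step cost stays proportional to the number of spikes delivered, which is part of why a sparse network can run on modest hardware.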

Credit: Tyler Sloan and Amy Sterling for FlyWire, Princeton University, (Dorkenwald et al., 2024)

Power Scaling: The Fundamental Constraint

Here’s the engineering problem: the fly brain operates on 5-10 microwatts. That’s 0.036-0.072 nanowatts per neuron. It enables:

- Controlled flight at 5 m/s with 200 Hz wing beat frequency

- Visual processing through 77,536 optic lobe neurons at ~200 fps

- Real-time sensorimotor integration with ~10ms latencies

- Onboard learning and memory formation

- Navigation, courtship, and decision-making

Compare this to autonomous systems:

| System | Power | Capability | Efficiency |
|---|---|---|---|
| Fruit fly brain | 10 μW | Full autonomy | 0.072 nW/neuron |
| Intel Loihi 2 | 2.26 μW/neuron | Inference only | ~31,000× worse than fly |
| NVIDIA Jetson (edge inference) | ~15 W | Vision + control | 10⁶× worse |
| Boston Dynamics Spot | ~400 W total | 90 min runtime | – |
| Human brain | 20 W | 1 exaFLOP equivalent | 50 PFLOPS/W |
| Frontier supercomputer | 20 MW | 1 exaFLOP | 50 GFLOPS/W |

At the same nominal throughput, the brain is ~10⁶× more energy efficient than conventional computing (20 W versus 20 MW). This isn’t a transistor physics problem; it’s architectural.
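The per-neuron and per-watt figures can be recomputed from the power and throughput values quoted above. The sketch below is plain arithmetic on those inputs; the "1 exaFLOP equivalent" for the brain is the article's estimate and is taken at face value here.

```python
FLY_NEURONS = 139_255
FLY_POWER_W = (5e-6, 10e-6)        # 5-10 microwatts, as quoted
LOIHI2_W_PER_NEURON = 2.26e-6      # 2.26 microwatts per neuron, as quoted
EXAFLOP = 1e18                     # operations per second


def per_neuron_nw(power_w, neurons):
    """Power per neuron in nanowatts."""
    return power_w / neurons * 1e9


def flops_per_watt(flops, power_w):
    """Throughput per watt."""
    return flops / power_w


fly_low_nw, fly_high_nw = (per_neuron_nw(p, FLY_NEURONS) for p in FLY_POWER_W)
loihi_vs_fly = LOIHI2_W_PER_NEURON / (fly_high_nw * 1e-9)
brain_eff = flops_per_watt(EXAFLOP, 20.0)      # 20 W
frontier_eff = flops_per_watt(EXAFLOP, 20e6)   # 20 MW

print(f"fly: {fly_low_nw:.3f}-{fly_high_nw:.3f} nW/neuron")
print(f"Loihi 2 vs fly, per neuron: {loihi_vs_fly:,.0f}x")
print(f"brain: {brain_eff:.1e} FLOPS/W, Frontier: {frontier_eff:.1e} FLOPS/W")
print(f"brain vs Frontier: {brain_eff / frontier_eff:.0e}x")
```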

Why Biology Wins: Event-Driven Sparse Computation

The connectome reveals three principles that explain biological efficiency:

1. Asynchronous event-driven processing

Neurons fire sparsely (~1-10 Hz average) and asynchronously. There’s no global clock. Computation happens only when a spike arrives. In the fly brain, within four synaptic hops nearly every neuron can influence every other (high recurrence), yet the network remains stable because most neurons are silent most of the time. Contrast this with synchronous processors where every transistor is clocked every cycle, consuming 30-40% of chip power just on clock distribution—even when idle.

2. Strong/weak connection asymmetry

The fly connectome shows that 70-79% of all synaptic weight is concentrated in just 16% of connections. These strong connections (>10 synapses between a neuron pair) carry reliable signals with high SNR. Weak connections (1-2 synapses) may represent developmental noise, context-dependent modulation, or exploratory wiring that rarely fires. This creates a core network of reliable pathways overlaid with a sparse exploratory graph—essentially a biological ensemble method that balances exploitation and exploration.

3. Recurrent loops instead of deep feedforward hierarchies

The Drosophila larval connectome (3,016 neurons, 548,000 synapses) revealed that 41% of neurons receive long-range recurrent input. Rather than the deep feedforward architectures in CNNs (which require many layers to build useful representations), insect brains use nested recurrent loops. This compensates for shallow depth: instead of composing features through 50+ layers, they iterate and refine through recurrent processing with 3-4 layers. Multisensory integration starts with sense-specific second-order neurons but rapidly converges to shared third/fourth-order processing—biological transfer learning that amortizes computation across modalities.
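The first principle, event-driven processing, is easy to quantify with a toy count of synaptic operations. The sketch below compares a clocked update, which touches every synapse every time step, with an event-driven one, which touches only the outgoing synapses of neurons that spiked. Network size, fan-out, and firing rate are hypothetical values chosen for illustration, and spikes are drawn as independent random events.

```python
import random


def count_synaptic_ops(n_neurons, fan_out, mean_rate_hz, duration_s, dt_s, seed=0):
    """Return (clocked_ops, event_ops) for the same simulated activity."""
    rng = random.Random(seed)
    steps = round(duration_s / dt_s)
    p_spike = mean_rate_hz * dt_s  # spike probability per neuron per step
    clocked_ops = 0
    event_ops = 0
    for _ in range(steps):
        # Clocked: every synapse is evaluated on every step.
        clocked_ops += n_neurons * fan_out
        # Event-driven: only synapses of neurons that just spiked are touched.
        spikes = sum(1 for _ in range(n_neurons) if rng.random() < p_spike)
        event_ops += spikes * fan_out
    return clocked_ops, event_ops


clocked, event = count_synaptic_ops(
    n_neurons=200, fan_out=50, mean_rate_hz=5.0, duration_s=1.0, dt_s=0.001
)
print(f"clocked: {clocked:,} ops, event-driven: {event:,} ops, ratio ~{clocked / event:.0f}x")
```

The expected saving is roughly 1 / (rate × dt), so at a few hertz and millisecond resolution the event-driven version does on the order of a hundredth of the work. Operation counts are only a proxy for energy, but they show where the savings come from.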

Neuromorphic Implementations: Narrowing the Gap

Event-driven neuromorphic chips implement these principles in silicon:

Intel Loihi 2 (2021)

- 1M neurons/chip, Intel 4 process

- Fully programmable neuron models

- Graded spikes (not just binary)

- 2.26 μW/neuron (vs. 0.072 nW for flies—still 31,000× worse)

- 16× better energy efficiency than GPUs on audio tasks

The Hala Point system (1,152 Loihi 2 chips) achieves 1.15 billion neurons at 2,600W maximum—demonstrating that neuromorphic scales, but still consumes orders of magnitude more than biology per neuron.

IBM NorthPole (2023)

- Eliminates von Neumann bottleneck by co-locating memory and compute

- 22 billion transistors, 800 mm² die

- 25× better energy efficiency vs. 12nm GPUs on vision tasks

- 72× better efficiency on LLM token generation

NorthPole is significant because it addresses the memory wall: in traditional architectures, moving data between DRAM and compute costs 100-1000× more energy than the actual computation. Co-locating memory eliminates this overhead.

BrainChip Akida (2021-present)

- First commercially available neuromorphic chip

- 100 μW to 300 mW depending on workload

- Akida Pico (Oct 2024): <1 mW operation

- On-chip learning without backprop

The critical insight: event-driven cameras + neuromorphic processors. Traditional cameras output full frames at 30-60 fps whether or not anything changed. Event-based cameras (DVS sensors) output asynchronous pixel-level changes only—mimicking retinal spike encoding. Paired with neuromorphic processors, this achieves dramatic efficiency: idle power drops from ~30W (GPU) to <1mW (neuromorphic).
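The difference between frame output and event output can be shown with a toy encoder. The sketch below turns a sequence of frames into per-pixel change events. It is a simplification: real DVS sensors respond asynchronously to changes in log intensity, while this version thresholds linear intensity differences between discrete frames. The function name and threshold are assumptions.

```python
def frames_to_events(frames, threshold):
    """Convert grayscale frames into (t, y, x, polarity) events.

    Each pixel keeps the intensity at which it last fired and emits an
    event only when the current value differs from it by >= threshold.
    """
    reference = [row[:] for row in frames[0]]
    events = []
    for t, frame in enumerate(frames[1:], start=1):
        for y, row in enumerate(frame):
            for x, value in enumerate(row):
                delta = value - reference[y][x]
                if abs(delta) >= threshold:
                    events.append((t, y, x, 1 if delta > 0 else -1))
                    reference[y][x] = value
    return events


def frame_with_dot(x_pos, size=3, bright=200, dark=10):
    """A dark frame with one bright pixel in the middle row."""
    frame = [[dark] * size for _ in range(size)]
    frame[1][x_pos] = bright
    return frame


# A bright dot moves left to right, then stops.
frames = [frame_with_dot(0), frame_with_dot(1), frame_with_dot(2), frame_with_dot(2)]
events = frames_to_events(frames, threshold=50)
pixels_sent_as_frames = sum(len(f) * len(f[0]) for f in frames[1:])
print(len(events), "events vs", pixels_sent_as_frames, "pixel values")
```

The last frame produces no events at all because nothing moved, which is the property behind the low idle power.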

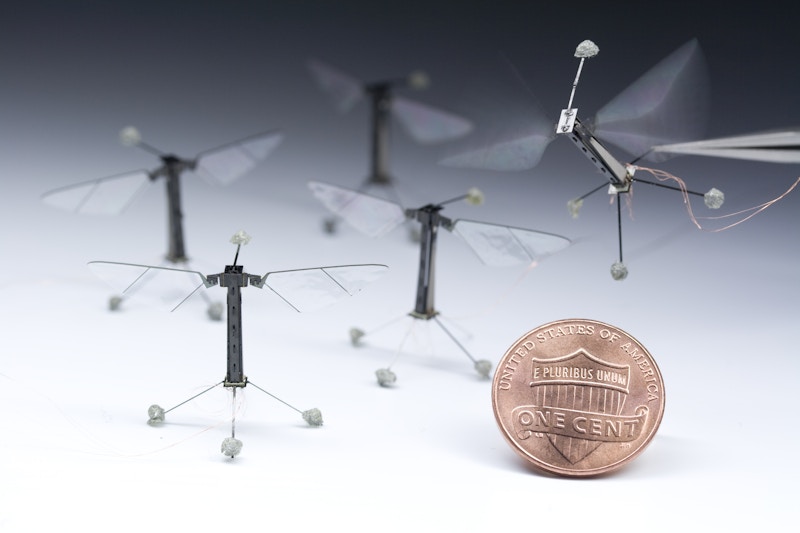

Credit: Wyss Institute at Harvard University

The Micro-Robotics Power Wall

The scaling problem becomes acute at insect scale. Harvard’s RoboBee (80-100 mg, 3 cm wingspan) flies at 120 mW. Solar cells deliver 0.76 mW/mg at full sun—but the RoboBee needs 3× Earth’s solar flux to sustain flight. This forces operation under halogen lights in the lab, not outdoor autonomy.

The fundamental constraint is energy storage scaling. As robots shrink, surface area (power collection) scales as r², volume (power demand) as r³. At sub-gram scale, lithium-ion provides 1.8 MJ/kg. Metabolic fat provides 38 MJ/kg—a 21× advantage that no battery chemistry on the roadmap approaches.

This creates a catch-22: larger batteries enable longer flight, but add weight requiring bigger actuators drawing more power, requiring even larger batteries. The loop doesn’t converge.
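The battery feedback loop can be written down as a fixed-point problem. The sketch below assumes, as a deliberate simplification, that flight power scales linearly with total mass and that all stored energy is usable. Under those assumptions the loop converges only below a critical flight time and diverges above it. The 90 mg dry mass is a midpoint of the 80-100 mg range quoted earlier, and the function name is hypothetical.

```python
def required_battery_mass(dry_mass_kg, power_per_kg, energy_density, flight_time_s,
                          max_iter=10_000):
    """Iteratively size a battery that must also carry its own weight.

    Returns the battery mass in kg, or None if the loop cannot converge.
    """
    # Battery mass needed per kg of total vehicle mass for this flight time.
    gain = power_per_kg * flight_time_s / energy_density
    if gain >= 1.0:
        return None  # each added gram of battery demands more than a gram of battery
    battery = 0.0
    for _ in range(max_iter):
        new_battery = gain * (dry_mass_kg + battery)
        if abs(new_battery - battery) < 1e-12:
            return new_battery
        battery = new_battery
    return battery


DRY_MASS_KG = 90e-6                      # assumed 90 mg vehicle without battery
POWER_PER_KG = 0.120 / DRY_MASS_KG       # 120 mW at that mass, assumed to scale linearly
LI_ION_J_PER_KG = 1.8e6
FAT_J_PER_KG = 38e6

ten_minutes = required_battery_mass(DRY_MASS_KG, POWER_PER_KG, LI_ION_J_PER_KG, 600)
one_hour = required_battery_mass(DRY_MASS_KG, POWER_PER_KG, LI_ION_J_PER_KG, 3600)
print(f"10 min: {ten_minutes * 1e6:.0f} mg of battery; 1 hour: {one_hour}")
print(f"ceiling with Li-ion: {LI_ION_J_PER_KG / POWER_PER_KG / 60:.1f} min, "
      f"with fat: {FAT_J_PER_KG / POWER_PER_KG / 3600:.1f} h")
```

In this toy model the endurance ceiling is simply energy density divided by specific power, no matter how much battery is added. Real vehicles do worse, since packaging, conversion losses, and larger actuators are all ignored here.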

Alternative approaches:

- Cyborg insects: Beijing Institute of Technology’s 74 mg brain controller interfaces directly with bee neural tissue via three electrodes, achieving 90% command compliance. Power: hundreds of microwatts for control electronics. Propulsion: biological, running on metabolic fuel. Result: 1,000× power advantage over fully robotic micro-flyers.

- Chemical fuels: The RoBeetle (88 mg crawling robot) uses catalytic methanol combustion. Methanol: 20 MJ/kg—10× lithium-ion density. But scaling combustion to aerial vehicles introduces complexity (fuel pumps, thermal management) at millimeter scales.

- Tethered operation: MIT’s micro-robot achieved 1,000+ seconds of flight with double aerial flips, but remains tethered to external power.

For untethered autonomous micro-aerial vehicles, current battery chemistry makes hour-long operation physically impossible.

The Dragonfly Algorithm: Proof of Concept

The dragonfly demonstrates what’s possible with ~1 million neurons. It intercepts prey with 95%+ success, responding to maneuvers in 50ms:

- 10 ms: photoreceptor response + signal transmission

- 5 ms: muscle force production

- 35 ms: neural computation

That’s 3-4 neuron layers maximum at biological signaling speeds (~1 m/s propagation, ~1 ms synaptic delays). The algorithm is parallel navigation: maintain constant line-of-sight angle to prey while adjusting speed. It’s simple, fast, and works with 1/100th the spatial resolution of human vision at 200 fps.
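Parallel navigation is compact enough to simulate directly. The sketch below is a minimal 2D version under stated assumptions: a constant-velocity target, a pursuer with fixed speed that chooses its heading each step, and hypothetical positions and speeds. The pursuer matches the target's velocity component perpendicular to the line of sight and spends the rest of its speed closing the gap, which keeps the bearing constant.

```python
import math


def intercept_velocity(pursuer, target, target_vel, pursuer_speed):
    """Pursuer velocity that keeps the line-of-sight direction fixed."""
    dx, dy = target[0] - pursuer[0], target[1] - pursuer[1]
    dist = math.hypot(dx, dy)
    ux, uy = dx / dist, dy / dist      # unit vector along the line of sight
    px, py = -uy, ux                   # unit vector perpendicular to it
    # Match the target's sideways motion so the bearing does not rotate.
    v_perp = target_vel[0] * px + target_vel[1] * py
    v_perp = max(-pursuer_speed, min(pursuer_speed, v_perp))
    # Spend the remaining speed closing distance.
    v_along = math.sqrt(pursuer_speed**2 - v_perp**2)
    return (v_along * ux + v_perp * px, v_along * uy + v_perp * py)


def simulate(pursuer, target, target_vel, pursuer_speed,
             dt=0.001, capture_radius=0.02, max_steps=20_000):
    """Return (capture_time_s or None, list of bearing angles in radians)."""
    bearings = []
    for step in range(max_steps):
        dx, dy = target[0] - pursuer[0], target[1] - pursuer[1]
        if math.hypot(dx, dy) <= capture_radius:
            return step * dt, bearings
        bearings.append(math.atan2(dy, dx))
        vx, vy = intercept_velocity(pursuer, target, target_vel, pursuer_speed)
        pursuer = (pursuer[0] + vx * dt, pursuer[1] + vy * dt)
        target = (target[0] + target_vel[0] * dt, target[1] + target_vel[1] * dt)
    return None, bearings


capture_time, bearings = simulate(
    pursuer=(0.0, 0.0), target=(1.0, 1.0), target_vel=(1.0, 0.0), pursuer_speed=3.0
)
print(f"captured after {capture_time:.3f} s, "
      f"bearing drift {max(bearings) - min(bearings):.2e} rad")
```

Each step needs only the current line of sight and the target's apparent sideways motion, with no trajectory prediction. That is consistent with a computation that fits in a few neural layers.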

The dragonfly’s visual system contains 441 input neurons feeding 194,481 processing neurons. Researchers have implemented this in III-V nanowire optoelectronics operating at sub-picowatt per neuron. The human brain averages 0.23 nW/neuron—still 100,000× more efficient than conventional processors per operation. Loihi 2 and NorthPole narrow this to ~1,000× gap, but the remaining distance requires architectural innovation, not just process shrinks.

Distributed Control vs. Centralized Bottlenecks

Insect nervous systems demonstrate distributed control:

- Local reflex arcs: 10-30 ms responses handled in the ventral nerve cord, without brain involvement

- Central pattern generators: rhythmic movements (walking, flying) produced locally in ganglia

- Parallel sensory streams: no serialization bottleneck

Modern autonomous vehicles route all sensor data to central compute clusters, creating communication bottlenecks and single points of failure. The biological approach is hierarchical:

- Low-level reactive control: fast (1-10 ms), continuous, local

- High-level deliberative planning: slow (100-1000 ms), occasional, centralized

This matches the insect architecture: local ganglia handle reflexes and pattern generation, brain handles navigation and decision-making. The division of labor minimizes communication overhead and reduces latency.
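The two-rate structure can be sketched as a single loop with a fast local controller and a slow central planner. Everything here is hypothetical: the 1 ms and 100 ms periods are taken from the ranges above, while the one-dimensional "joint", the gains, and the goal are invented for illustration.

```python
def run_hierarchy(duration_ms, reflex_period_ms=1, planner_period_ms=100, goal=1.0):
    """Simulate a slow planner setting targets for a fast local controller.

    Returns (final_position, reflex_calls, planner_calls).
    """
    position = 0.0
    setpoint = 0.0
    reflex_calls = 0
    planner_calls = 0
    for t in range(duration_ms):
        if t % planner_period_ms == 0:
            # Slow, centralized: move the setpoint halfway toward the goal.
            setpoint += 0.5 * (goal - setpoint)
            planner_calls += 1
        if t % reflex_period_ms == 0:
            # Fast, local: proportional correction toward the current setpoint.
            position += 0.1 * (setpoint - position)
            reflex_calls += 1
    return position, reflex_calls, planner_calls


position, reflex_calls, planner_calls = run_hierarchy(duration_ms=1000)
print(f"position={position:.3f}, reflex calls={reflex_calls}, planner calls={planner_calls}")
```

The central planner runs a hundred times less often than the local loop, so only a small fraction of control decisions ever cross the link to central compute.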

Scaling Constraints Across Morphologies

The power constraint manifests predictably across scales:

Micro-aerial (0.1-1 g): Battery energy density is the hard limit. Flight times measured in minutes. Solutions require chemical fuels, cyborg approaches, or accepting tethered operation.

Drones (0.1-10 kg): Power ∝ v³ for flight. Consumer drones: 20-30 min. Advanced commercial: 40-55 min. Headwinds cut range by half. Monarch butterflies migrate thousands of km on 140 mg of fat—200× better mass-adjusted efficiency.

Ground robots (10-100 kg): Legged locomotion is 2-5× less efficient than wheeled for the same distance (constantly fighting gravity during stance phase). Spot: 90 min runtime, 3-5 km range. Humanoids: 5-10× worse cost of transport than humans despite electric motors being more efficient than muscle (90% vs. 25%). The difference is energy storage and integrated control.

Computation overhead: At large scale (vehicles drawing 10-30 kW), AI processing (500-1000W) is roughly 2-10% overhead. At micro-scale, computation dominates: a 3cm autonomous robot with CNN vision gets 15 minutes from a 40 mAh battery because video processing drains faster than actuation.
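Both ends of that comparison reduce to simple arithmetic. The sketch below computes the compute share for the large-vehicle figures and the average draw implied by a 40 mAh battery lasting 15 minutes. The 3.7 V nominal cell voltage is an assumption (typical for a single lithium-ion cell) and is not stated in the text.

```python
def overhead_fraction(compute_w, total_w):
    """Share of total power spent on computation."""
    return compute_w / total_w


def average_draw(capacity_mah, runtime_min, nominal_voltage=3.7):
    """Return (average current in A, average power in W) for a full discharge."""
    current_a = (capacity_mah / 1000.0) / (runtime_min / 60.0)
    return current_a, current_a * nominal_voltage


# Large vehicles: pair the extremes of the quoted ranges.
lowest = overhead_fraction(500, 30_000)
highest = overhead_fraction(1_000, 10_000)

# Micro-robot: 40 mAh drained in 15 minutes.
current_a, power_w = average_draw(capacity_mah=40, runtime_min=15)

print(f"large-vehicle compute share: {lowest:.1%} to {highest:.1%}")
print(f"micro-robot average draw: {current_a * 1000:.0f} mA, ~{power_w:.2f} W at 3.7 V")
```

A sustained draw of about 160 mA from a 40 mAh cell is a 4C discharge rate, which gives a sense of how heavily onboard vision loads a battery of that size.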

The Engineering Path Forward

The connectome provides a blueprint, but implementation requires system-level integration:

1. Neuromorphic processors for always-on sensing

Event-driven computation with DVS cameras enables <1 mW idle power. Critical for battery-limited mobile robots.

2. Hierarchical control architectures

Distribute reflexes and pattern generation to local controllers. Reserve central compute for high-level planning. Reduces communication overhead and latency.

3. Task-specific optimization over general intelligence

The dragonfly’s parallel navigation algorithm is simple, fast, and sufficient for 95%+ interception success. General-purpose autonomy is expensive. Narrow, well-defined missions allow exploiting biological efficiency principles.

4. Structural batteries and variable impedance actuators

Structural batteries serve as both energy storage and load-bearing elements, improving payload mass fraction. Variable impedance actuators mimic muscle compliance, reducing energy waste during impacts.

5. Chemical fuels for micro-robotics

At sub-gram scale, metabolic fuel’s 20-40× energy density advantage over batteries is insurmountable with current chemistry. Methanol combustion, hydrogen fuel cells, or cyborg approaches are the only paths to hour-long micro-aerial autonomy.

Credit: IEEE Spectrum

Conclusion: Efficiency is the Constraint

The fruit fly connectome took 38 years after C. elegans because complexity scales superlinearly with neuron count. The same kind of scaling governs autonomous robotics: each step up in capability demands disproportionately more energy, computation, and integration complexity.

The fly brain’s 5-10 μW budget for full autonomy isn’t just impressive—it’s the benchmark. Current neuromorphic chips are 1,000-30,000× worse per neuron. Closing this gap requires implementing biological principles: event-driven sparse computation, strong/weak connection asymmetry, recurrent processing over deep hierarchies, and distributed control.

Companies building autonomous systems without addressing energetics will hit a wall—the same wall that kept connectomics at 302 neurons for 38 years. Physics doesn’t care about better perception models if the robot runs out of power before completing useful work.

When robotics achieves even 1% of biological efficiency at scale, truly autonomous micro-robots become feasible. Until then, the scaling laws remain unforgiving. The connectome reveals both how far we are from biological efficiency—and the specific architectural principles required to close that gap.

The message for robotics engineers: efficiency isn’t a feature, it’s the product.

Explore the full FlyWire visualization at https://flywire.ai/

Michael Williams

October 17, 2025 at 1:25 pm

IMHO the energy and complexity gaps indicate that biological brains are doing something qualitatively different than current machine learning systems. It’s not just a matter of efficiency or architecture… we’re looking at two different phenomena that are only superficially similar.

The single human neuron has more chemical interactions per second than New York City has economic transactions per second. It’s not logical to think that a perceptron is an adequate model for a neuron.

Nick Mastronardi

October 22, 2025 at 3:48 am

First, amazing post – thanks for sharing Tim! I am eager to watch, as we encounter connectomes of more complex organisms, the relationship between efficiency and resiliency (robustness or anti-fragility). Efficient delegated systems are great for well defined environments, but when those environments are perturbed, simple creatures often fail and the species leans on numbers and mutations, whereas more complex creatures are better equipped to handle the perturbation as an individual organism instead of a species, which requires some of these delegated autonomous decisions to be connecting back into a higher level: a little slower, and a little more inefficient in the immediate task (like a dragonfly interception), but more prone for survival (like hungry African lions learning to hunt seals on Namibia’s coast). When I was building layers of ML models for pricing, marketing, finance, and supply chain forecasting at Amazon, for some of the goods, the environment was stable and the ML models didn’t need to be re-trained or re-calibrated often. They could be fast and efficient. Other goods were big, important, and in very dynamic and out-sample perturbed environments that we wanted to be very careful with, and that had implications into other systems, so they need to be re-investigated, re-trained, re-calibrated more often and then their findings had to be propagated into other more autonomous dependent systems. Simple biological system efficiency is mesmerizingly beautiful. I’m excited to monitor, in more complex organisms’ connectomes, the efficiency combining with the resiliency (not just physical but neurological).

Alex Cope

October 22, 2025 at 12:06 pm

Brilliant article, Tim! You hit the nail on the head with the efficiency challenge. At Opteran, we’ve been deconstructing those insect brain algorithms for over a decade. Our navigation algorithm is so efficient it runs on edge compute using less than ten watts of power. Mimicking nature is the only way to “make efficiency the product” and it’s why our tech is being tested on the Mars Rover. https://youtu.be/W8oUonvS_i8?si=AWA9SyCN3tGGjnjg