Robot Bees and other Little Robots

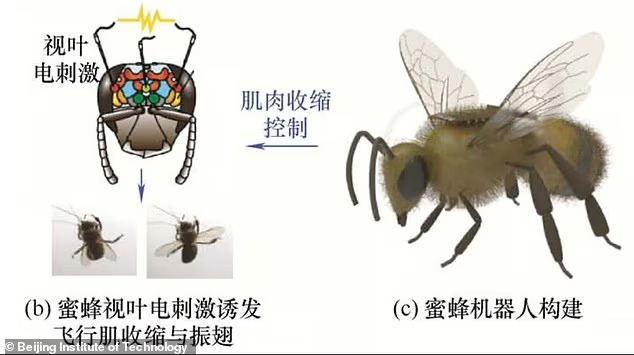

Researchers in China are crafting cyborg bees by fitting them with incredibly thin neural implants (article: Design of flight control system for honeybee based on EEG stimulation). The story isn’t that biology replaces electronics; it’s that biology supplies free actuation, sensing, and ultra-low-power computation that we can piggyback on with just enough silicon to plug those abilities into our digital world. By leveraging the natural flight capabilities and endurance of biological hosts, you get a covert reconnaissance or delivery platform well suited to military surveillance, counterterrorism operations, physically hacking hardware, and disaster relief missions where traditional drones would be too conspicuous or lack the agility to navigate complex environments.

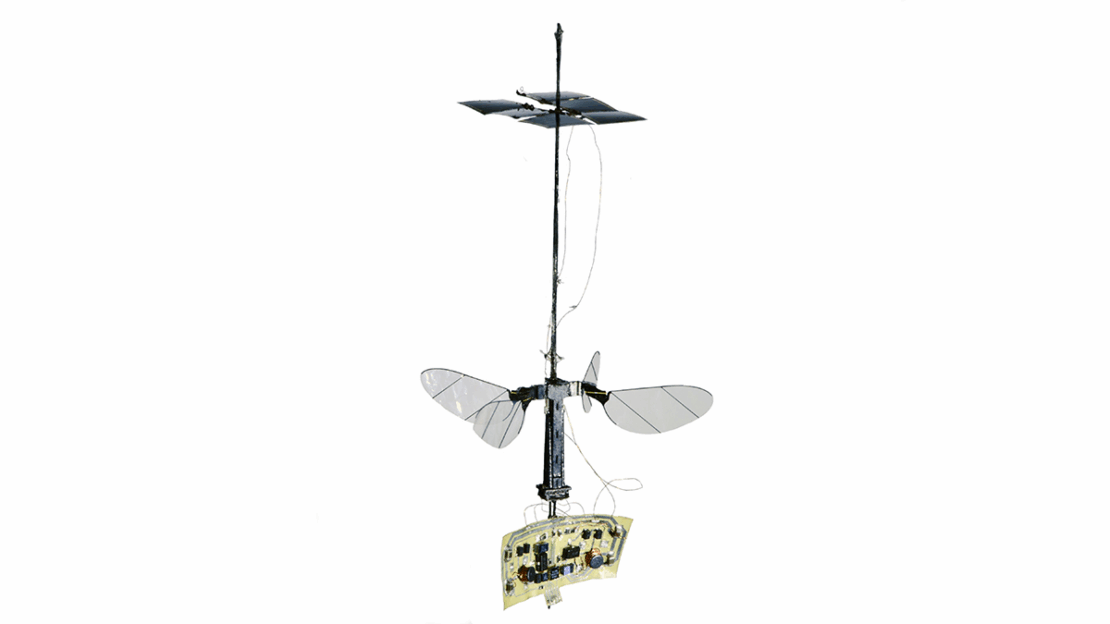

So, there are lots of news articles on this, but the concept of miniature flying machines is far from new. For over a decade before projects like Harvard’s pioneering RoboBee took flight in 2007, researchers were already delving into micro-aerial vehicles (MAVs), driven by the quest for stealthy surveillance and other specialized applications. Early breakthroughs, such as the untethered flight of the Delft University of Technology’s “DelFly,” laid crucial groundwork, proving that insect-scale flight was not just a sci-fi dream but an achievable engineering challenge. This long history underscores a persistent, fascinating pursuit: shrinking aerial capabilities to an almost invisible scale.

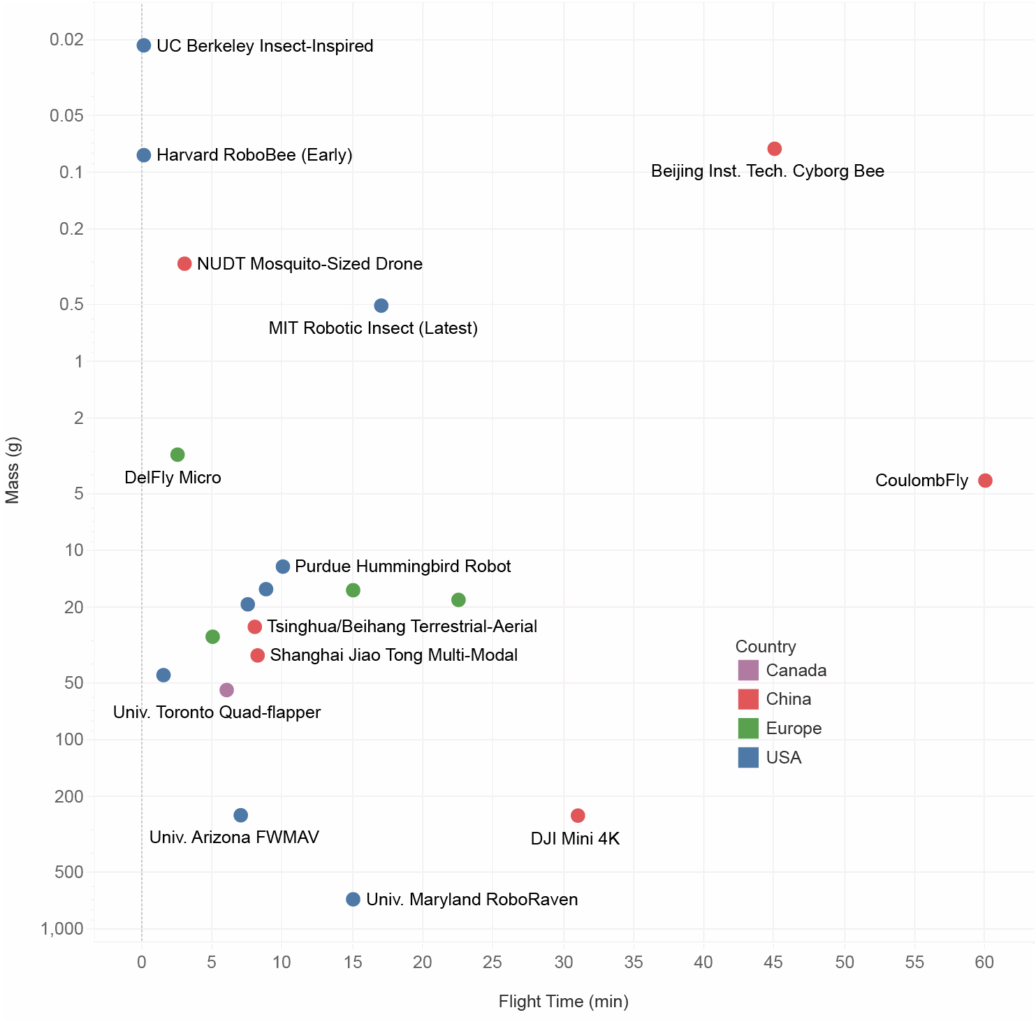

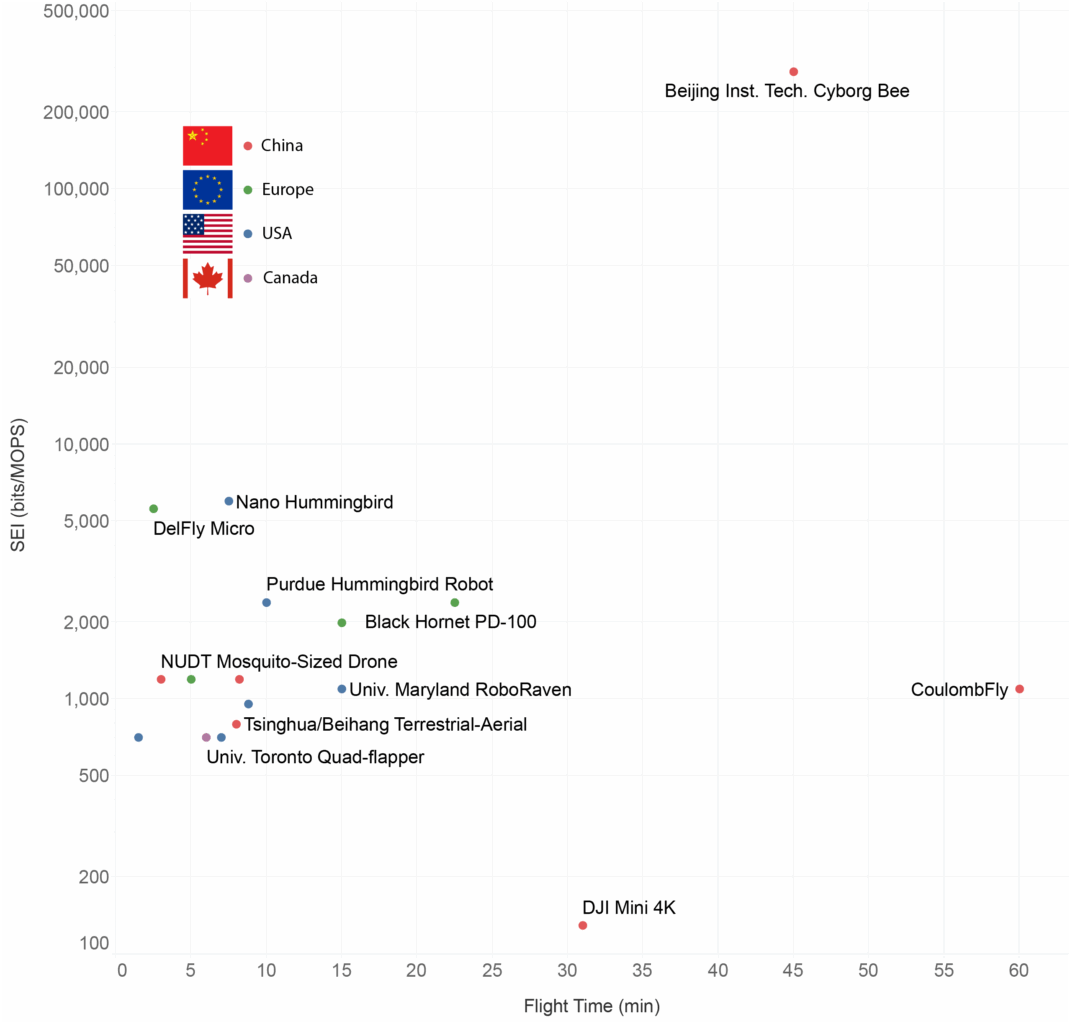

Unlike the “China has a robot Bee!” articles, I wanted to step back and survey this space and where it’s going, and, well, this is kinda a big deal. The plot below reveals the tug-of-war between a robot’s mass and its flight endurance. While the general trend shows heavier robots flying longer, the interesting data are in the extremes. Beijing’s “Cyborg Bee” is a stunning outlier, achieving incredible flight times (around 45 minutes) with a minuscule 0.074-gram control unit by cleverly outsourcing the heavy lifting to a living bee: a biological cheat code for endurance. Conversely, the “NUDT Mosquito-Sized Drone” pushes miniaturization to its absolute limit at 0.3 grams, but sacrifices practical flight time, lasting only a few minutes. This highlights the persistent “holy grail” challenge: building truly insect-scale artificial robots that can fly far and obey orders. Even cutting-edge artificial designs like MIT’s latest robotic insect, while achieving an impressive 17 minutes of flight, still carry more mass than their biological counterparts. The plot vividly demonstrates that while human ingenuity can shrink technology to astonishing scales, nature’s efficiency in flight remains the ultimate benchmark.

Researchers figured out how to deliver precise electronic pulses that create rough sensory illusions, compelling the bee to execute flight commands: turning left or right, advancing forward, or retreating on demand. And this works. During laboratory demonstrations, these bio-hybrid drones achieved a remarkable 90% command compliance rate, following directional instructions about nine times out of ten. By hijacking the bee’s neural pathways, China has effectively weaponized nature’s own engineering, creating a new class of biological surveillance assets that combine the stealth of living organisms with the precision of electronic control systems.

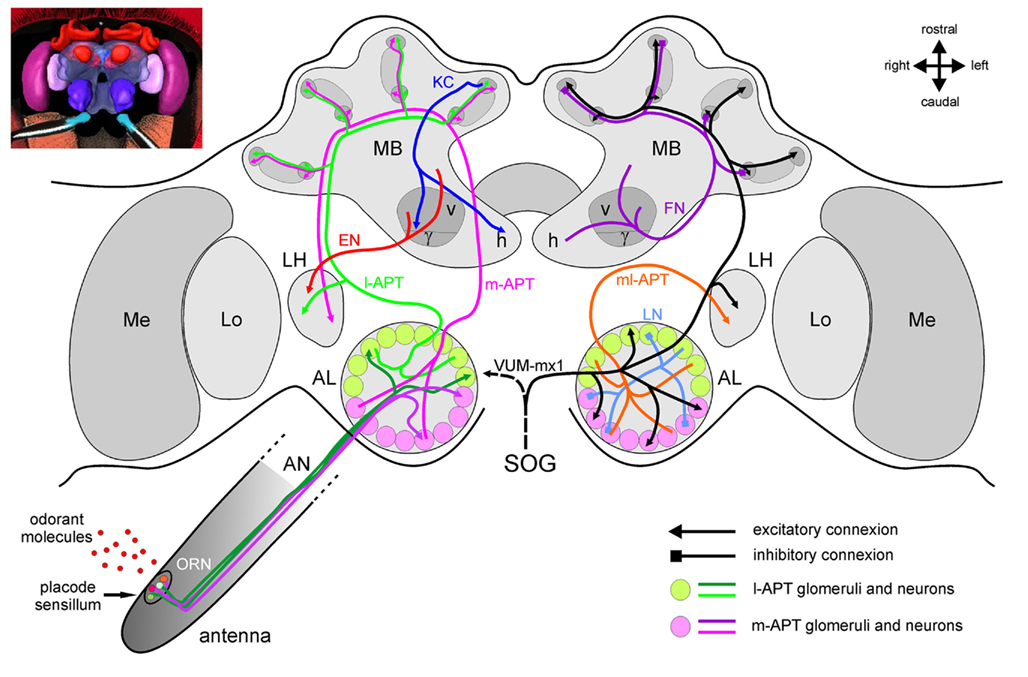

The Beijing team’s breakthrough lies in their precise neural hijacking methodology: three hair-width platinum-iridium micro-needles penetrate the bee’s protocerebrum, delivering charge-balanced biphasic square-wave pulses at a soothing ±20 microamperes—just above the 5-10 µA activation threshold for insect CNS axons yet safely below tissue damage limits. These engineered waveforms, typically running at 200-300 Hz to mimic natural flight central pattern generators, create directional illusions rather than forcing specific muscle commands. Each pulse lasts 100-300 microseconds, optimized to match the chronaxie of 2-4 micrometer diameter insect axons.
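To make the waveform concrete, here is a minimal sketch of a charge-balanced biphasic square-wave train built from the numbers quoted above (±20 µA, 200-300 Hz, 100-300 µs). It is not the team's stimulator code. The function name, the 1 MHz sample rate, the cathodic-first ordering, and the reading of the pulse duration as a per-phase width are all my assumptions.

```python
def biphasic_pulse_train(amplitude_ua=20.0, freq_hz=250.0, phase_width_us=200.0,
                         duration_ms=20.0, sample_rate_hz=1_000_000):
    """Return current samples (in µA) for a charge-balanced biphasic pulse train.

    Each period holds one negative phase followed by one positive phase of equal
    width and amplitude, then rests at zero until the next period starts.
    """
    period_samples = round(sample_rate_hz / freq_hz)
    phase_samples = round(phase_width_us * 1e-6 * sample_rate_hz)
    if 2 * phase_samples > period_samples:
        raise ValueError("two phases do not fit inside one period")

    total_samples = round(duration_ms * 1e-3 * sample_rate_hz)
    samples = []
    for i in range(total_samples):
        pos = i % period_samples
        if pos < phase_samples:
            samples.append(-amplitude_ua)   # assumed cathodic-first phase
        elif pos < 2 * phase_samples:
            samples.append(+amplitude_ua)   # equal and opposite recovery phase
        else:
            samples.append(0.0)             # inter-pulse rest
    return samples


train = biphasic_pulse_train()
net_charge = sum(train)  # zero over whole periods means the train is charge balanced
```

With the defaults, the train covers five full periods, so the positive and negative phases cancel exactly. Charge balance matters because any net DC current at the electrode tip is what damages tissue over time.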

Figuring this out is more guessing than careful math. Researchers discovered these “magic numbers” through systematic parametric sweeps across amplitude (5-50 µA), frequency (50-400 Hz), and pulse width (50-500 µs) grids, scoring binary outcomes like “turn angle >30°” until convergence on optimal control surfaces. Modern implementations use closed-loop Bayesian optimization with onboard IMUs and nRF24L01 radios, reducing tuning time from hours to ~90 seconds while adding ±10% amplitude jitter to counteract the bee’s rapid habituation response.
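The grid sweep described above can be sketched in a few lines. This is an illustration under stated assumptions, not the researchers' tuning code: the grid points are ones I picked inside the quoted ranges, `run_trial` is a hypothetical callback that would return a measured turn angle, and `toy_trial_factory` is a made-up response model that exists only so the example runs.

```python
import itertools
import random

# Grid points chosen inside the ranges quoted in the text (the exact steps are assumptions).
AMPLITUDES_UA = [5, 10, 20, 35, 50]
FREQUENCIES_HZ = [50, 100, 200, 300, 400]
PULSE_WIDTHS_US = [50, 100, 200, 300, 500]


def sweep(run_trial, trials_per_point=10, threshold_deg=30.0):
    """Score every grid point by the fraction of trials with turn angle > threshold."""
    scores = {}
    for params in itertools.product(AMPLITUDES_UA, FREQUENCIES_HZ, PULSE_WIDTHS_US):
        hits = sum(run_trial(*params) > threshold_deg for _ in range(trials_per_point))
        scores[params] = hits / trials_per_point
    return scores


def toy_trial_factory(seed=0):
    """Build a placeholder trial function. This is NOT measured bee data."""
    rng = random.Random(seed)

    def toy_trial(amp_ua, freq_hz, width_us):
        # Invented response that peaks near 20 µA, 250 Hz, 200 µs, plus noise.
        closeness = ((1 - abs(amp_ua - 20) / 45)
                     * (1 - abs(freq_hz - 250) / 350)
                     * (1 - abs(width_us - 200) / 450))
        return 60.0 * closeness + rng.gauss(0, 5)

    return toy_trial


scores = sweep(toy_trial_factory())
best_params = max(scores, key=scores.get)  # (amplitude µA, frequency Hz, width µs)
```

A full grid like this costs 125 points times the trials per point, which is why the closed-loop optimization mentioned above is attractive: it spends trials only where the outcome is still uncertain.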

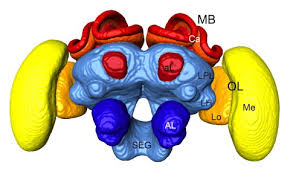

You can’t figure this out on live bees. To get these measurements, the honeybee head capsule is isolated from the body while leaving the entire brain undamaged. The head is cut free and placed into a dye-loaded, cooled Ringer solution in a staining chamber for 1 hour, then fixed in a recording chamber, covered with a cover-slip, and imaged under the microscope. With this preparation, you can run experiments on hundreds of honeybees at low and high current intensities. After stimulation, the Isolated Pulse Stimulator was set to generate a dissociating pulse (20 µA DC, 15–20 s), which partially dissociated Fe³⁺ from the stimulating electrode and deposited it in the surrounding brain tissue, marking the stimulation site for post-mortem analysis.

Precise probe placement relies on decades of accumulated neuroanatomical knowledge. Researchers leverage detailed brain atlases created through electron microscopy and confocal imaging, which map neural structures down to individual synaptic connections. Before inserting stimulation electrodes, they consult these three-dimensional brain maps to target specific neural clusters with sub-millimeter accuracy. During experiments, they verify their targeting using complementary recording techniques: ultra-fine borosilicate glass microelectrodes with 70-120 MΩ tip resistance penetrate individual neurons, capturing their electrical chatter at 20,000 samples per second. These recordings reveal whether the stimulation reaches its intended targets—researchers can literally watch neurons fire in real-time as voltage spikes scroll across their screens, confirming that their three platinum-iridium needles are activating the correct protocerebral circuits. This dual approach—anatomical precision guided by brain atlases combined with live electrophysiological validation—ensures the cyborg control signals reach exactly where they need to go.

What was cool here is that they found a nearly linear relationship between the stimulus burst duration and the generated torque. This stimulus-torque characteristic holds for burst durations of up to 500 ms. Hierarchical Bayesian modeling revealed that the linearity of the stimulus-torque characteristic was generally invariant across bees, with individually varying slopes. That means a single statistical model can generalize across the population while still accounting for individual differences.
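As a rough illustration of "same linear shape, different slope per bee", the sketch below fits one least-squares slope per individual and then averages them. It is a deliberately simplified stand-in for the hierarchical Bayesian model, not a reproduction of it. The no-intercept form, the function name, and the sample numbers are my assumptions, and the recordings are invented for illustration.

```python
def slope_through_origin(durations_ms, torques):
    """Least-squares slope k for the model torque = k * duration (no intercept)."""
    num = sum(d * t for d, t in zip(durations_ms, torques))
    den = sum(d * d for d in durations_ms)
    return num / den


# Illustrative, made-up recordings in arbitrary torque units. NOT data from the study.
recordings = {
    "bee_a": ([100, 200, 300, 400, 500], [0.21, 0.39, 0.62, 0.80, 1.01]),
    "bee_b": ([100, 200, 300, 400, 500], [0.11, 0.19, 0.31, 0.40, 0.49]),
}

# One slope per individual, then a crude population-level summary.
slopes = {bee: slope_through_origin(d, t) for bee, (d, t) in recordings.items()}
population_slope = sum(slopes.values()) / len(slopes)
```

A hierarchical model goes further by treating each bee's slope as a draw from a shared population distribution, so a new bee with only a few trials borrows strength from the others. The plain average here only hints at that idea.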

Learning the signals is only half the battle. The power challenge defines the system’s ultimate constraints: while a flying bee dissipates ~200 milliwatts mechanically, the cyborg controller must operate within a ~10 mW mass-equivalent budget—about what a drop of nectar weighs. Current 3-milligram micro-LiPo cells provide only ~1 milliwatt-hour, forcing engineers toward energy harvesting solutions like piezoelectric thorax patches that scavenge 20-40 microwatts from wingbeats or thermoelectric films adding single-digit microwatts from body heat. Yes, the bee is the battery.

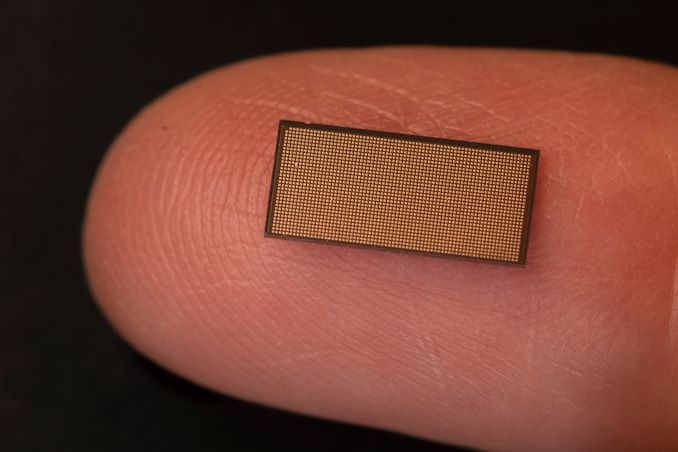

This power scarcity drives the next evolution: rather than imposing external commands, future systems will eavesdrop on the bee’s existing neural traffic, including stretch receptors encoding wingbeat amplitude, Johnston’s organs signaling airspeed, and antennal lobe spikes classifying odors with <50 ms latency at just 5 µW of biological power. Event-driven neuromorphic processors like Intel’s Loihi 2 can decode these spike trains at <50 microjoules per inference, potentially enabling bidirectional brain-computer interfaces where artificial intelligence augments rather than overrides the insect’s 100-million-year-evolved sensorimotor algorithms.

Challenges

Power remains the fundamental bottleneck preventing sustained cyborg control. A flying bee burns through ~200 milliwatts mechanically, but the electronic backpack must survive on a mere ~10 milliwatt mass budget—equivalent to carrying a single drop of nectar. Current micro-LiPo cells weighing 3 milligrams deliver only ~1 milliwatt-hour, barely enough for minutes of operation. Engineers have turned to the bee itself as a power source: piezoelectric patches glued to the thorax harvest 20-40 microwatts from wingbeat vibrations, while thermoelectric films capture single-digit microwatts from body heat. Combined, these provide just enough juice for duty-cycled neural recording and simple radio backscatter—but not continuous control. The thermal constraint is equally brutal: even 5 milliwatts of continuous power dissipation heats the bee’s dorsal thorax by 1°C, disrupting its olfactory navigation. This forces all onboard electronics to operate in the sub-milliwatt range, making every microjoule precious. The solution may lie in passive eavesdropping rather than active control—tapping into the bee’s existing 5-microwatt neural signals instead of imposing power-hungry external commands.
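The battery arithmetic behind "minutes of operation" is easy to check. The sketch below uses only the figures quoted above (a ~1 mWh cell, a ~10 mW budget, 20-40 µW harvested). The function name and the simple constant-load model are my assumptions, and conversion losses and duty cycling are ignored.

```python
BATTERY_MWH = 1.0  # ~1 milliwatt-hour from a 3 mg micro-LiPo cell, as quoted in the text


def runtime_minutes(load_mw, harvest_uw=0.0, battery_mwh=BATTERY_MWH):
    """Minutes of operation at a constant load, after subtracting harvested power."""
    net_mw = load_mw - harvest_uw / 1000.0   # convert µW to mW before subtracting
    if net_mw <= 0:
        return float("inf")                  # harvesting covers the whole load
    return battery_mwh * 60.0 / net_mw


full_budget = runtime_minutes(10.0)                   # the whole ~10 mW budget
sub_milliwatt = runtime_minutes(0.5)                  # a sub-milliwatt design point
with_harvest = runtime_minutes(0.5, harvest_uw=30.0)  # same load plus mid-range harvesting
```

At the full 10 mW budget the cell lasts 6 minutes, which matches the "minutes of operation" claim. At sub-milliwatt loads the same cell lasts hours, and the 20-40 µW from harvesting only starts to matter once the load drops toward tens of microwatts.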

I summarize the rest of the challenges below:

| Challenge | Candidate approach | Practical limits |
|---|---|---|
| RF antenna size | Folded-loop 2.4 GHz BLE or sub-GHz LoRa patterns printed on 20 µm polyimide | Needs >30 mm trace length, almost bee-size; detunes with wing motion |
| Power for Tx | Ambient backscatter using 915 MHz carrier (reader on the ground) | 100 µW budget fits; 1 kbps uplink only (ScienceDaily) |
| Network dynamics | Bio-inspired swarm routing: every node rebroadcasts if SNR improves by Δ>3 dB | Scalability shown in simulation up to 5,000 nodes; real tests at NTU Singapore hit 120 roach-bots (ScienceDaily) |
| Localization | Fusion of onboard IMU (20 µg) + optic flow + Doppler-based ranging to readers | IMU drift acceptable for ≤30 s missions; longer tasks need visual odometry |

The cyborg bee’s computational supremacy becomes stark when viewed through the lens of task efficiency rather than raw processing power. While silicon-based micro-flyers operate on ARM Cortex processors churning through 20-170 MOPS (mega-operations per second), the bee’s million-neuron brain achieves full visual navigation on just 5 MOPS, with neurons firing at a leisurely 5 Hz average. That is 4 to 34 times fewer arithmetic operations than those micro-flyers use, and the gap masks a deeper truth: the bee’s sparse, event-driven neural code extracts far more navigational intelligence per computation.

$$ \text{SEI} = \frac{\text{MAP vox}/\text{sec} \times \text{Pose bits}}{\text{MOPS}}$$

To show this, I made up a metric that combines each vehicle’s vision rate, positional accuracy and onboard compute into a single “task‑efficiency” score—the Simultaneous localization and mapping (SLAM)-Efficiency Index (SEI)—so we could compare a honey‑bee brain running on microwatts with silicon drones running on megahertz. Simply put, SEI measures how many bits of world-state knowledge each platform generates per unit of processing. With SEI, the bee achieves 290,000 bits/MOPS—over 100 times better than the best silicon autopilots. Even DJI’s Mini 4K, with its 9,600 MOPS quad-core processor, manages only 420 bits/MOPS despite throwing two thousand times more compute at the problem.
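For readers who want to poke at the numbers, here is a small sketch of the SEI formula and the ratios quoted above. The function and variable names are mine, and only the SEI and MOPS figures come from the text. The numerators (map voxels per second times pose bits) are back-solved from those figures rather than taken from separately stated inputs.

```python
def sei(map_vox_per_sec, pose_bits, mops):
    """SLAM-Efficiency Index: (map voxels/sec * pose bits) / MOPS."""
    return map_vox_per_sec * pose_bits / mops


# Figures quoted in the text.
BEE_SEI, BEE_MOPS = 290_000, 5
DJI_SEI, DJI_MOPS = 420, 9_600

efficiency_ratio = BEE_SEI / DJI_SEI   # how many times more efficient the bee is
compute_ratio = DJI_MOPS / BEE_MOPS    # how much more compute the drone spends

# Numerators implied by SEI * MOPS (back-solved, not stated in the text).
bee_numerator = BEE_SEI * BEE_MOPS
dji_numerator = DJI_SEI * DJI_MOPS
output_ratio = dji_numerator / bee_numerator
```

Read this way, the drone produces only about 2.8 times more mapped "world-state" than the bee while spending 1,920 times the compute, which is where the roughly 690-fold SEI gap comes from.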

This efficiency gap stems from fundamental architectural differences: bee brains process visual scenes through parallel analog channels that compress optic flow into navigation commands without digitizing every pixel, while our drones waste cycles on frame buffers and matrix multiplications. The bee’s ring-attractor neurons solve path integration as a single rotation-symmetric loop, updating its cognitive map 200 times per second (synchronized with wingbeats) using mere microwatts. Silicon systems attempting equivalent SLAM functionality (feature extraction, bundle adjustment, loop closure) burn 5-15 watts on embedded GPUs. Until neuromorphic processors can match the bee’s event-driven, power-sipping architecture, cyborg insects will remain the only sub-gram platforms capable of autonomous navigation. The chart below tells the story on a logarithmic scale: that lonely dot at 290,000 SEI represents not just superior engineering, but a fundamentally different computational paradigm. Note that flapping-wing robots cluster below 200 MOPS because that’s all a 168 MHz Cortex-M4 can supply; adding bigger processors blows the weight budget at these sizes.

The race to miniaturize autonomous flight reveals a fundamental truth about computation: raw processing power means nothing without the right architecture. While teams worldwide chase incremental improvements in battery life and chip efficiency, Beijing’s cyborg bee leverages biology to solve this problem. Just as xAI’s Grok 4 throws trillion-parameter models at language understanding while smaller, cleaner models achieve similar results with careful data curation, bees handle similar complexity with microwatts. The lesson isn’t to abandon silicon for biology, but to recognize that sparse, event-driven architectures trump dense matrix multiplications when every microjoule counts.

Looking forward, the convergence is inevitable: neuromorphic processors like Intel’s Loihi 2 are beginning to bridge the efficiency gap, while cyborg systems like Beijing’s bee point the way toward hybrid architectures that leverage both biological and silicon strengths. The real breakthrough won’t come from making smaller batteries or faster processors—it will come from fundamentally rethinking how we encode, embed, and process information. Whether it’s Scale AI proving that better algorithms beat bigger models, or a bee’s ring-attractor neurons solving navigation with analog elegance, the message is clear: in the contest between brute force and biological intelligence, nature still holds most of the cards.

Sevgi Gurbuz

July 15, 2025 at 5:25 pm

Nice article, thought you also might like this :

NC State Cyborg Insect Networks

https://research.ece.ncsu.edu/aros/project/cyborg-insect-network/

November 18, 2016 – Seeker – Drones and Cyborg Roaches Team Up to Map Disaster Zones

November 17, 2016 – TechXplore – Tech would use Drones and Insect Biobots to Map Disaster Areas

July 22, 2015 – New Scientist – Cyborg Cockroach and Drone Teams can Locate Disaster Survivors

December 13, 2013 – IEEE Spectrum – Cyborg Cockroaches to the Rescue

October 18, 2013 – The Wall Street Journal – Send In the Cockroach Squad

October 16, 2013 – Gigaom – Cyborg Insects could Map Collapsed Buildings for First Responders

October 16, 2013 – NBC News – Don’t Panic! These Cyborg Roaches are Trained for Emergencies

October 16, 2013 – NCSU News – Software Uses Cyborg Swarm to Map Unknown Environs

George

July 15, 2025 at 6:50 pm

This is seriously cool write up, including the 50% of it that buzzed right over my head.

Mooch

July 16, 2025 at 3:03 pm

Apart from the graphic description of the multiple heads of bees required for the tech development I’m going to almost concede defeat, in that, they (the adversary) seem to have succeeded in finding the elusive free lunch. It is not free, of course, it’s costing the bee significantly. To me…there is nothing operationally relevant about the honey bee… the tech, however, points to the use of nature’s real power source…the evolution of the albatross. If you can control an albatross’s brain, you’ve bought 1 year, non-stop, flight over denied territory. Useful payloads…easy to find… at the carry weight of an albatross. I hope this is not where we are headed…but clearly it’s the next step for China. And it will work much better than a honey bee.

Dan Stagg

August 1, 2025 at 9:11 pm

Tim

Good to see your mind is active as ever. Nice work. I particularly like the Sparse model and efficient data structure thinking. As an ME lost in a EE century- I was jonesing on the power thermal part. Micro power thermal is the limfac